I’ve been discussing evaluation for a while now, from identifying why we need principles to support our evaluation strategy to potential metrics and places to collect performance data. One thing I realised I hadn’t covered in detail is why we need to offer up and report different interactions with learning and performance outcomes.

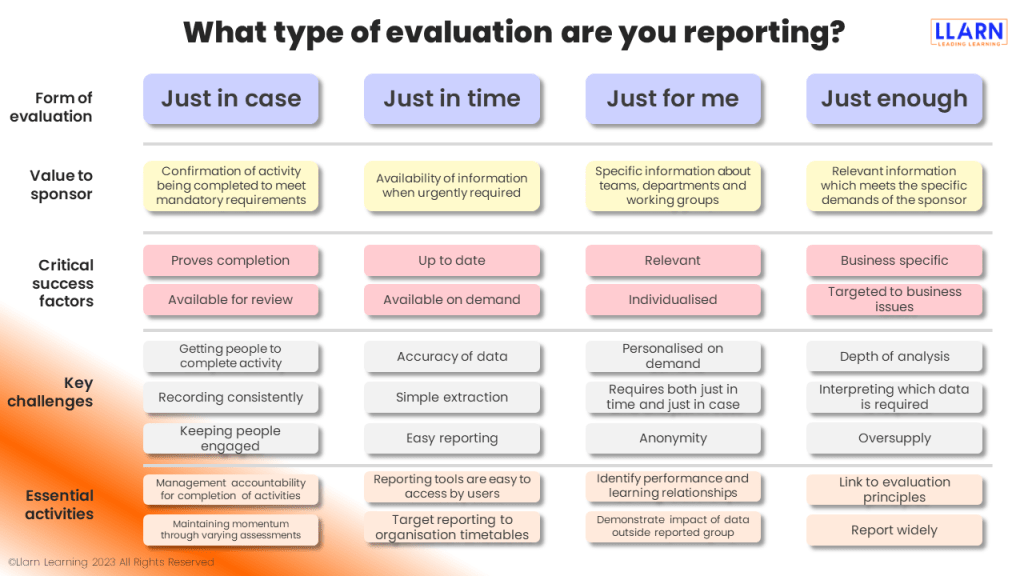

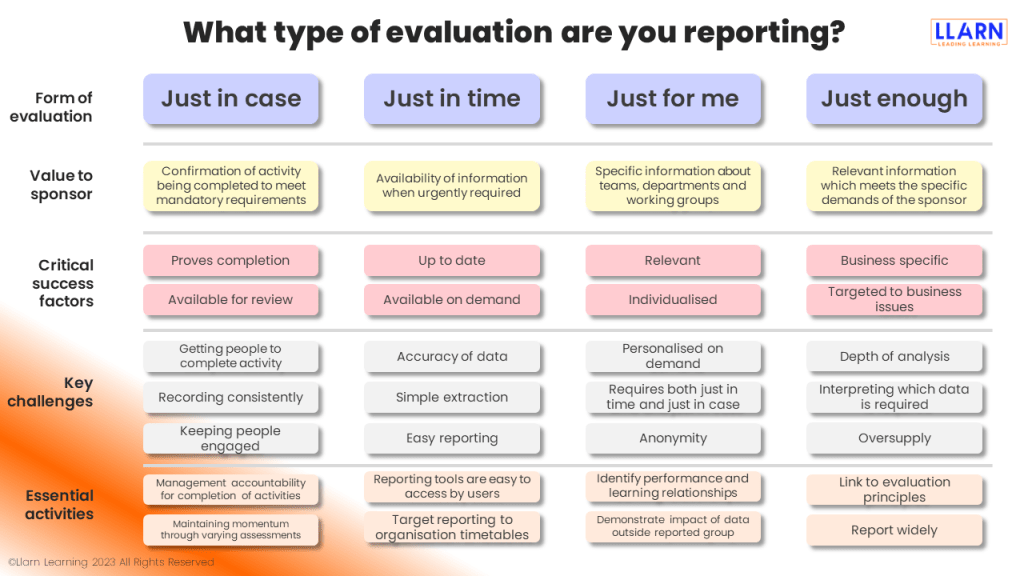

As learning reflects modern working, there’s been a shift from just in case (JIC), to just in time (JIT), to just for me (JFM), and just enough (JE) learning. The same can be said for evaluation expectations: these 4 domains are where evaluation is required. We produce JIC data to meet regulatory expectations. JIT reporting is the ability to collect up-to-the-minute data. Whether we can analyse it is a different matter, but the ability to collect ‘in real time’ is part of our approach. The JFM domain is where we wish to personalise the reporting. We should be able to personalise to an individual level; in practice it means simply producing data for one team, department or function. The JE domain is where learning hasn’t got to yet. It’s where managers don’t care whether people enjoyed the training, only whether it made any difference to how they perform.

In the past, and in many places still, JIC learning data has been collected and dressed up as evaluation. Why we need it is never questioned, although we all assume it is in case we are audited. I thought I should pull this apart a bit, so I have broken the 4 domains of evaluation need down into the attached table.

This is my first run at identifying some of the relevant factors across each of these domains, and I would appreciate your thoughts and comments.